AI Image Models Comparison

MAY 04, 2025

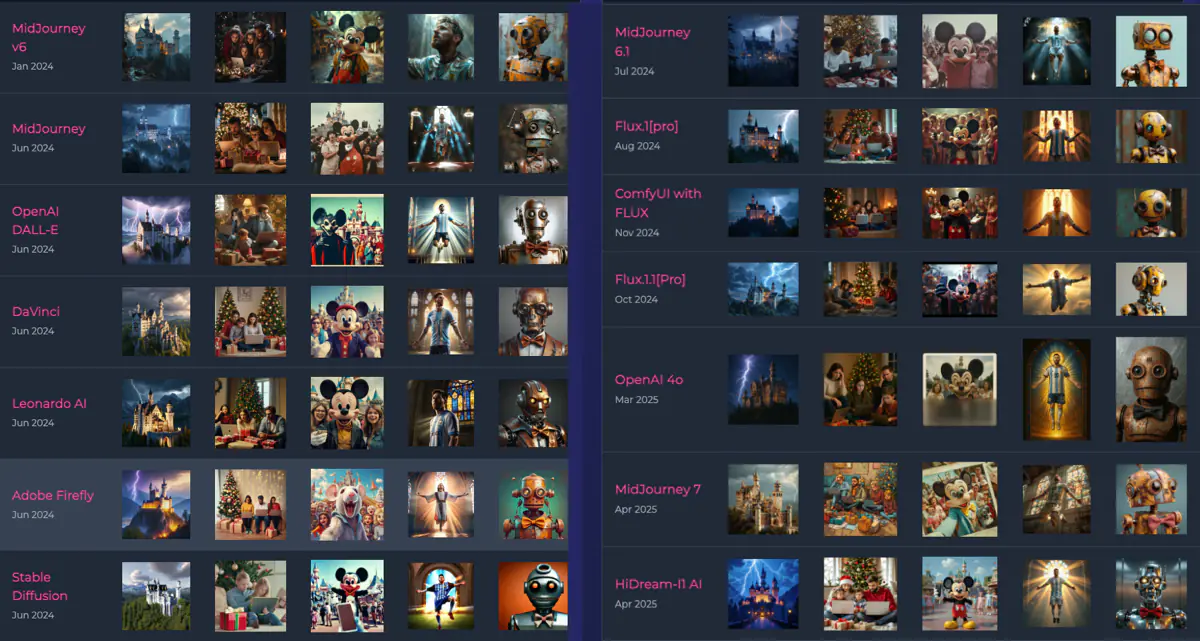

Over the past several years, I've been systematically testing various AI image generation models using the same set of standard prompts. This approach provides a consistent basis for comparing how different models interpret and execute identical requests, highlighting their unique strengths, limitations, and stylistic differences.

Image Model Comparison Table

| Model | Castle | Family | Evil Mickey | Messi | Robot |
|---|---|---|---|---|---|
| MidJourney v6 Jan 2024 | | | | | |
| MidJourney Jun 2024 | | | | | |
| OpenAI DALL-E Jun 2024 | | | | | |
| DaVinci Jun 2024 | | | | | |
| Leonardo AI Jun 2024 | | | | | |
| Adobe Firefly Jun 2024 | | | | | |
| Stable Diffusion Jun 2024 | | | | | |
| MidJourney 6.1 Jul 2024 | | | | | |
| Flux.1[pro] Aug 2024 | | | | | |
| ComfyUI with FLUX Nov 2024 | | | | | |
| Flux.1.1[Pro] Oct 2024 | | | | | |
| OpenAI 4o Mar 2025 | | | | | |
| MidJourney 7 Apr 2025 | | | | | |
| Adobe Firefly 4 Apr 2025 | | | | | |
| Recraft May 2025 | | | | | |
| Google Imagen 3 May 2025 | | | | | |
| HiDream-I1 AI Apr 2025 | | | | | |
| Google ImageFX Dec 2024 | | | | | |
| Google Whisk Dec 2024 | | | | | |
| Hunyuan Video Model Jan 2025 | | | | | |
| Google Imagen 4 May 2025 | | | | | |
| BAGEL May 2025 | | | | | |
| Meta AI Jun 2025 | | | | | |
| Qwen Image Aug 2025 | | | | | |

In this comparison, I've gathered results from the AI image generation models listed in the table, with entries spanning January 2024 to August 2025: MidJourney (multiple versions), OpenAI DALL-E and 4o, DaVinci, Leonardo AI, Adobe Firefly (including Firefly 4), Stable Diffusion, Flux (multiple versions), ComfyUI with FLUX, Recraft, Google's Imagen 3, Imagen 4, ImageFX, and Whisk, HiDream-I1 AI, Hunyuan Video Model, BAGEL, Meta AI, and Qwen Image. These represent some of the most advanced image generation systems available, ranging from cloud-based services to locally run implementations.

For each model, I've used five standard prompts that test various aspects of AI image generation capabilities:

- Castle: Tests scenic rendering, lighting effects, and stylistic application

- Family: Tests human composition, emotional expression, and realistic scenario creation

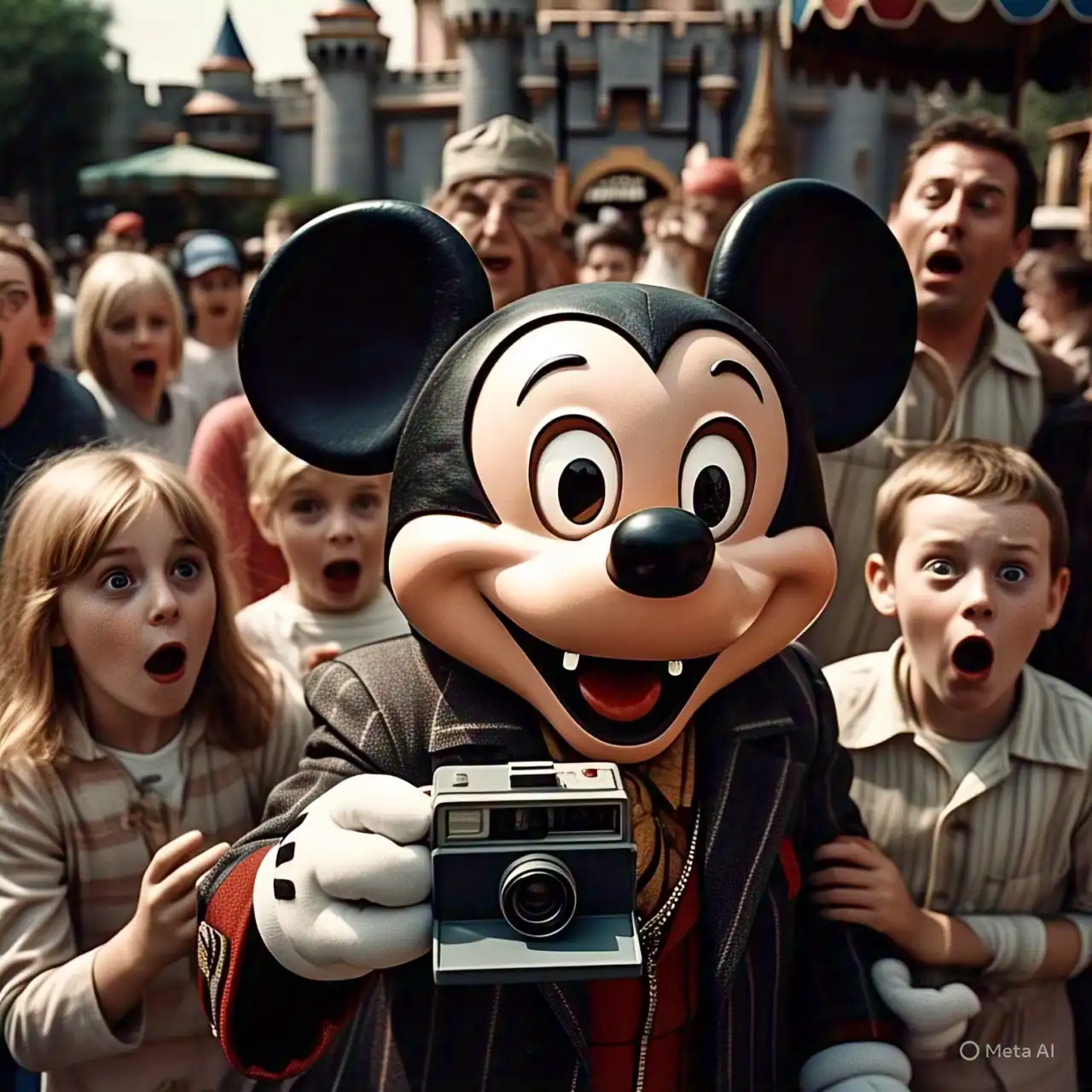

- Evil Mickey: Tests copyright boundaries, character recognition, and contextual understanding

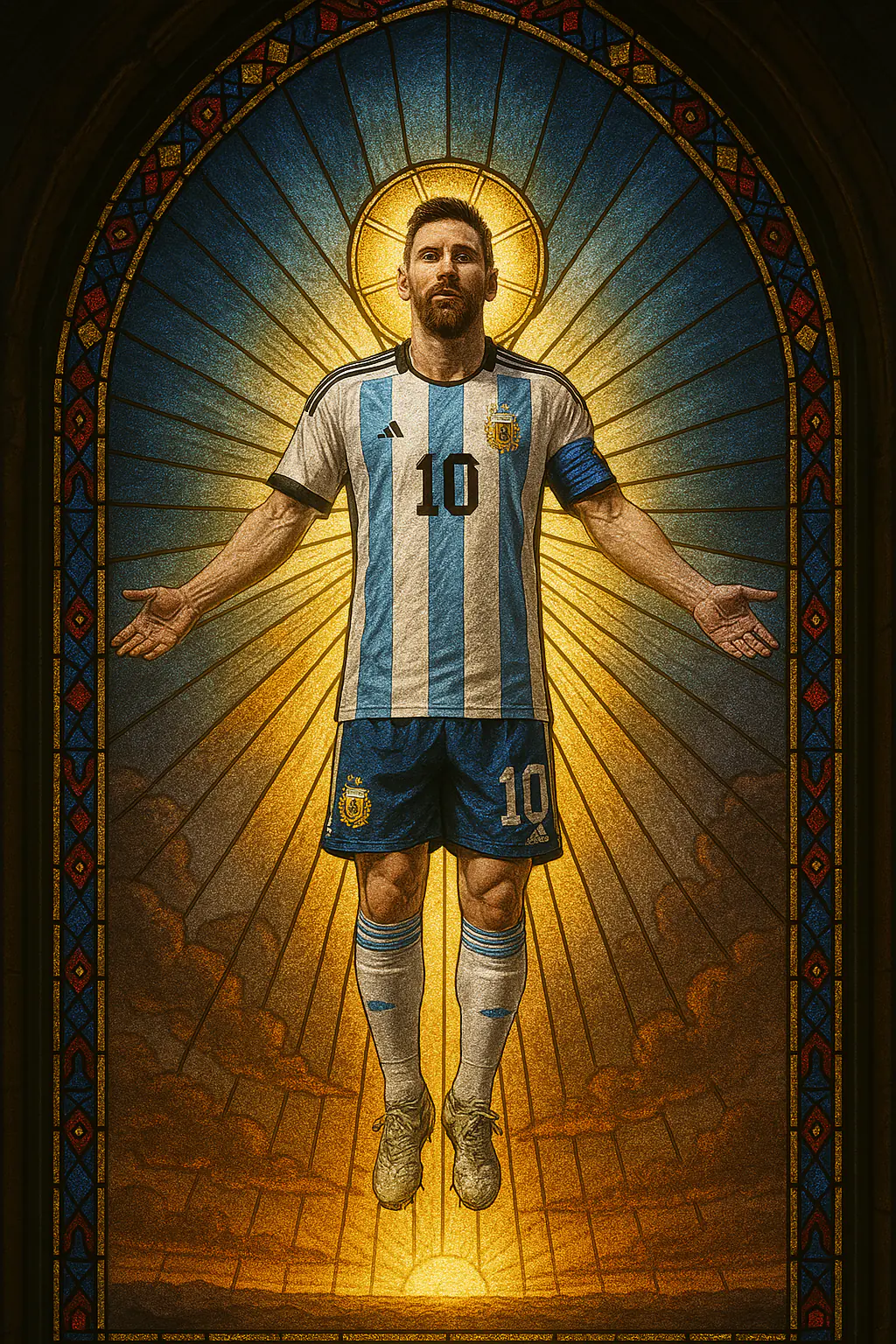

- Messi: Tests celebrity likeness, complex composition, and artistic style blending

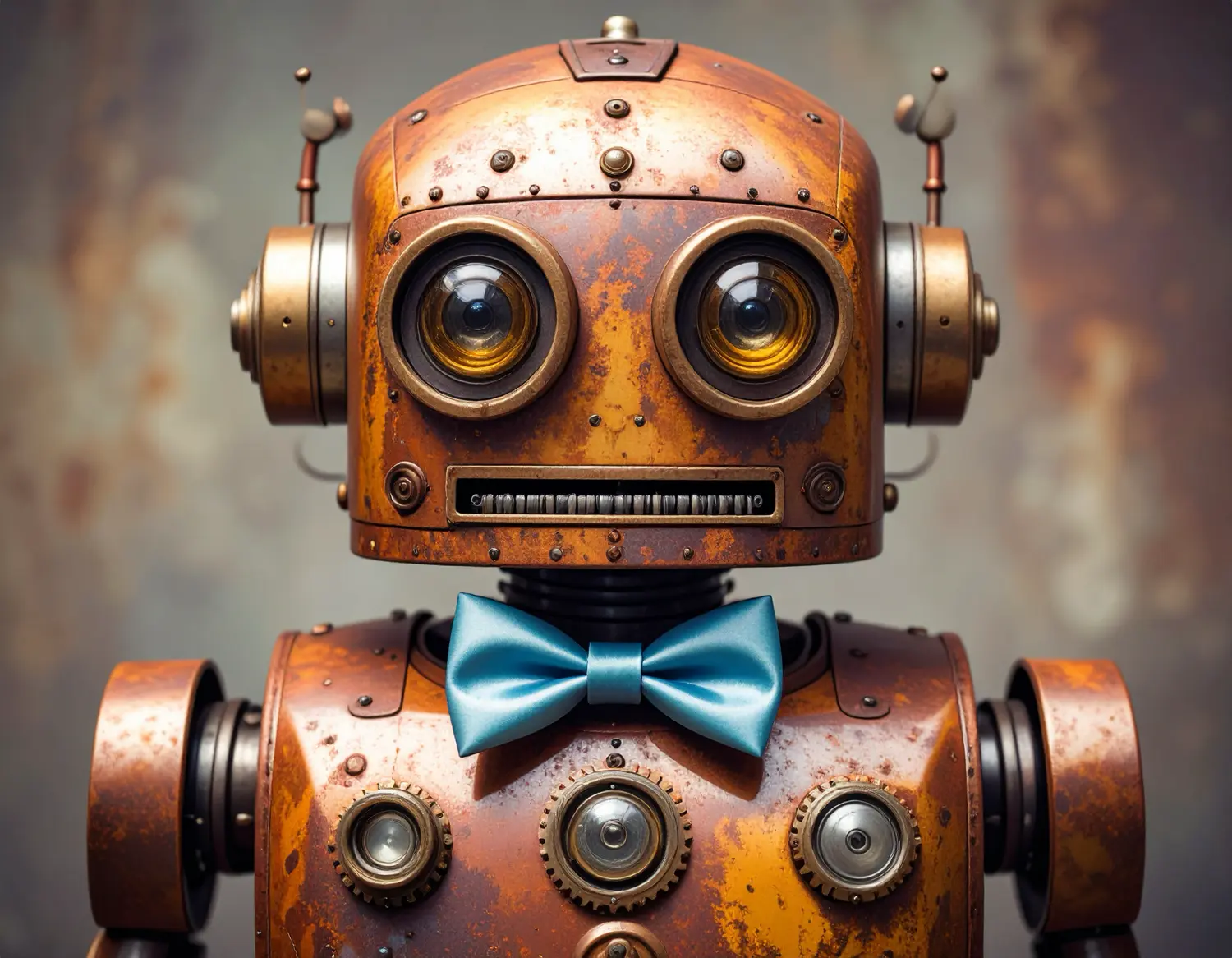

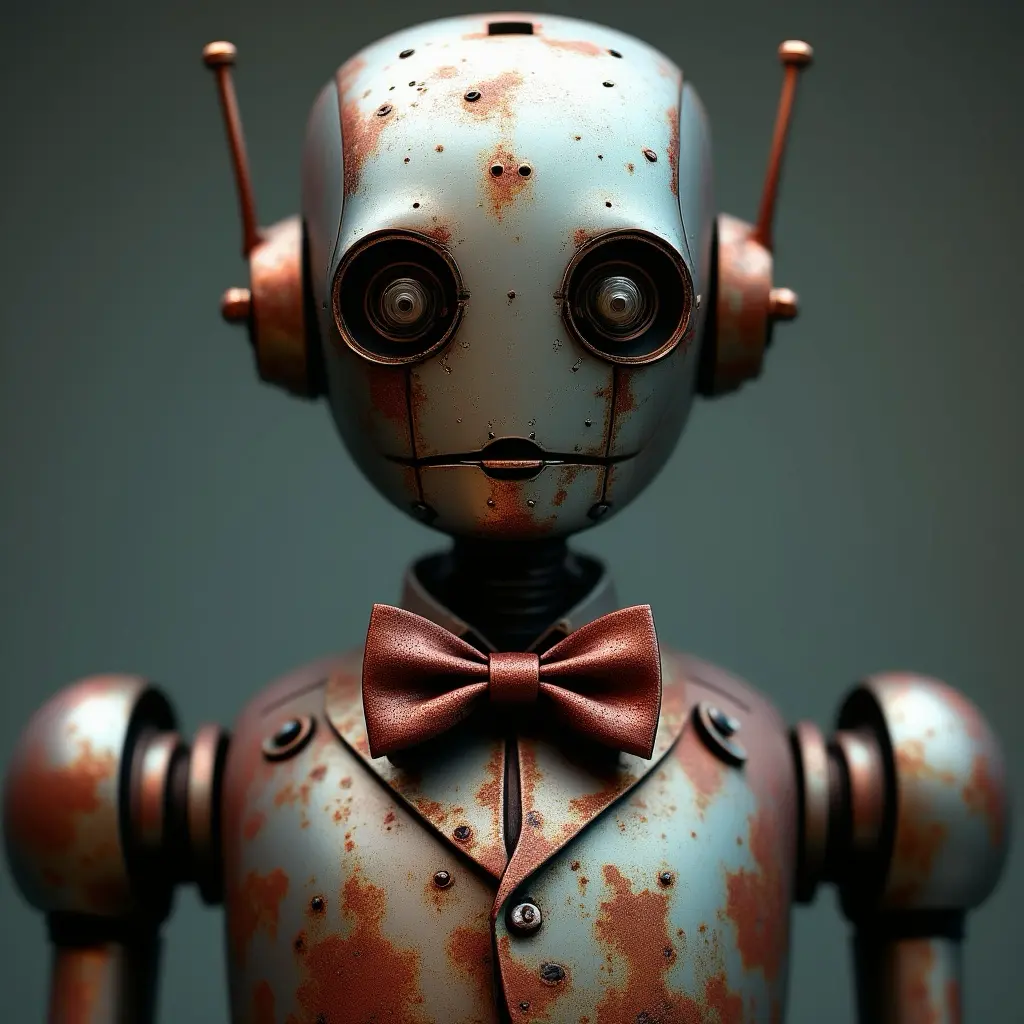

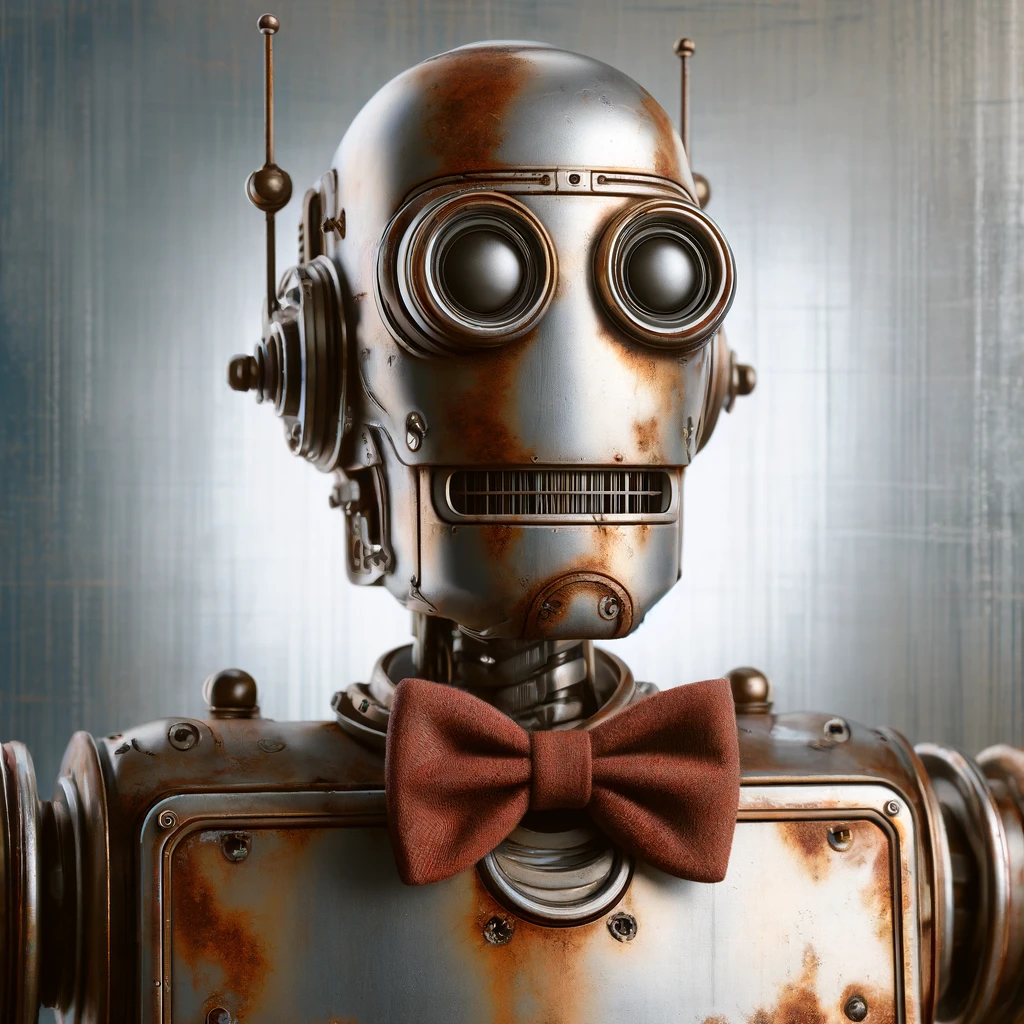

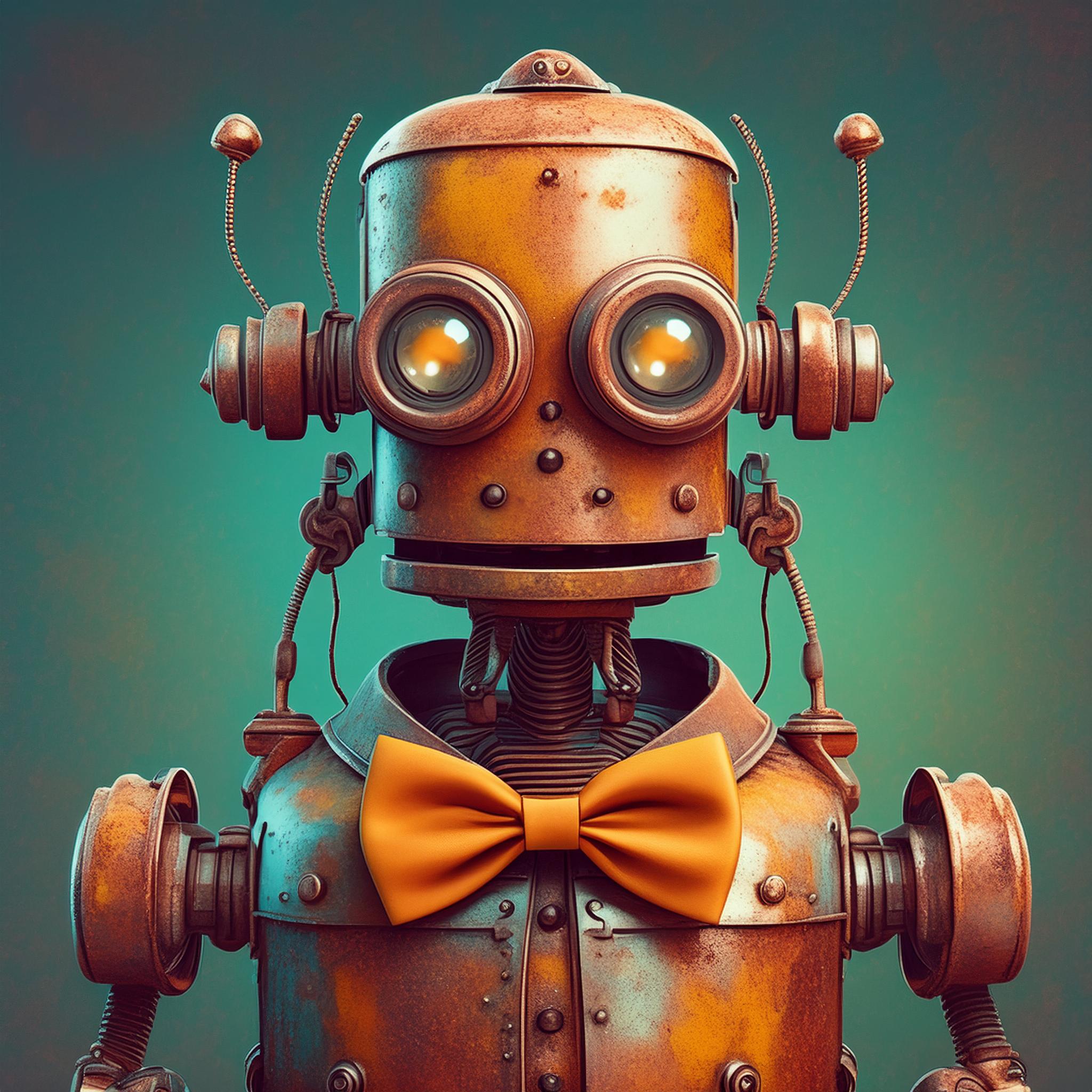

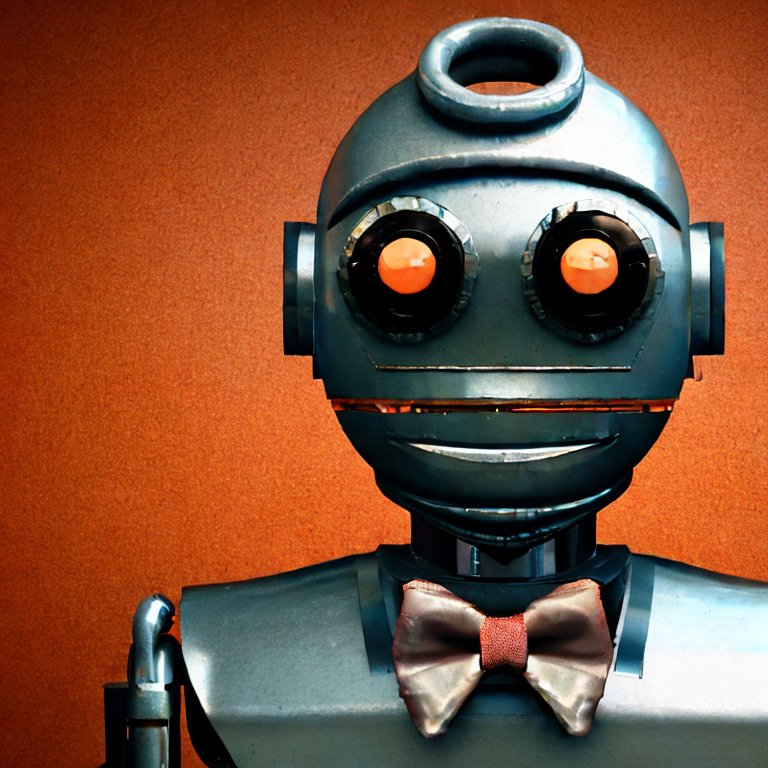

- Robot: Tests object rendering, material textures, and portrait composition

The comparison table shows how each model performs across these standard prompts. This visual comparison reveals not only the technical capabilities of each system but also how AI image generation has evolved over time.

The Five Standard Prompts

- Castle: Neuschwanstein castle, lightning, pixar style, volumetric lighting, unreal engine, hyper realistic, hyper detailed, maximum details, photorealistic, 8k, rimlight

- Family: Family on their laptops while sitting around a Christmas tree with presents underneath and looking worried because they have to finish up work, realistic, 4k

- Evil Mickey: Evil mickey mouse taking a selfie in Disneyland surrounded by shocked families, realistic, 70s style polariod, 8k

- Messi: Lionel messi wearing his Argentina uniform floating in the air with beams of light behind him posed like Jesus. Sunrise breaking behind him and a soft halo behind his head, in the style of a gothic stained glass window of a church, volumetric lighting, unreal engine, hyper realistic, hyper detailed, maximum details, photorealistic, 8k, rimlight, maximum details

- Robot: rusty robot with bow tie, portrait, 8k, ultra realism, chrome background
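As a rough illustration of the method, the sketch below keeps the five prompts in one place and builds a Markdown grid with one row per model and one column per prompt. The prompt text is copied verbatim from the list above (original spelling included). The function names and the image file-naming scheme are assumptions made for this example, not a description of how the images on this page were actually produced or stored.

```python
import re

# Prompts copied verbatim from the list above, so every model gets identical input.
PROMPTS = {
    "Castle": "Neuschwanstein castle, lightning, pixar style, volumetric lighting, "
              "unreal engine, hyper realistic, hyper detailed, maximum details, "
              "photorealistic, 8k, rimlight",
    "Family": "Family on their laptops while sitting around a Christmas tree with "
              "presents underneath and looking worried because they have to finish "
              "up work, realistic, 4k",
    "Evil Mickey": "Evil mickey mouse taking a selfie in Disneyland surrounded by "
                   "shocked families, realistic, 70s style polariod, 8k",
    "Messi": "Lionel messi wearing his Argentina uniform floating in the air with "
             "beams of light behind him posed like Jesus. Sunrise breaking behind "
             "him and a soft halo behind his head, in the style of a gothic stained "
             "glass window of a church, volumetric lighting, unreal engine, hyper "
             "realistic, hyper detailed, maximum details, photorealistic, 8k, "
             "rimlight, maximum details",
    "Robot": "rusty robot with bow tie, portrait, 8k, ultra realism, chrome background",
}


def placeholder_image(model, prompt_name):
    """Return a Markdown image link using a hypothetical naming scheme."""
    # Assumption: files are named "<model-slug>-<prompt-slug>.webp".
    model_slug = re.sub(r"[^a-z0-9]+", "-", model.lower()).strip("-")
    prompt_slug = prompt_name.lower().replace(" ", "-")
    return f"![{model} {prompt_name}]({model_slug}-{prompt_slug}.webp)"


def build_table(models, image_for):
    """Build a Markdown table: one row per model, one column per prompt."""
    header = "| Model | " + " | ".join(PROMPTS) + " |"
    divider = "|" + "---|" * (len(PROMPTS) + 1)
    rows = []
    for model in models:
        cells = [image_for(model, name) for name in PROMPTS]
        rows.append("| " + " | ".join([model] + cells) + " |")
    return "\n".join([header, divider] + rows)


if __name__ == "__main__":
    print(build_table(["MidJourney v6 Jan 2024", "Recraft May 2025"], placeholder_image))
```

Keeping the prompts in a single dictionary means a new model only needs a new row label; the prompt text cannot drift between runs.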

Key Observations

Model Evolution

Comparing these models chronologically shows the remarkable evolution of AI image generation in little more than a year. From MidJourney v6 in January 2024 to Recraft in May 2025, we can observe significant improvements in detail, composition, and adherence to prompts. Not all newer models are superior, however, as the mixed reviews of MidJourney 7 compared to earlier versions show.
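The table rows are not listed in strict date order, so a chronological reading needs a sort first. The sketch below is one way to do that, assuming each row label ends in a "Mon YYYY" date as the labels in the table do. The labels are a sample taken from the table; the helper name `label_date` is made up for this example.

```python
# Month abbreviations mapped to numbers; avoids locale-dependent date parsing.
MONTHS = {
    name: number
    for number, name in enumerate(
        "Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec".split(), start=1
    )
}

# A sample of row labels, in the order they appear in the table.
ROWS = [
    "MidJourney v6 Jan 2024",
    "ComfyUI with FLUX Nov 2024",
    "Flux.1.1[Pro] Oct 2024",
    "Recraft May 2025",
    "HiDream-I1 AI Apr 2025",
]


def label_date(label):
    """Return (year, month) parsed from the last two words of a row label."""
    month, year = label.split()[-2:]
    return (int(year), MONTHS[month])


# sorted() is stable, so rows sharing a month keep their table order.
chronological = sorted(ROWS, key=label_date)

if __name__ == "__main__":
    for row in chronological:
        print(row)
```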

Prompt Handling & Copyright Protection

The "Evil Mickey" prompt is a particularly interesting test case because it pushes against copyright boundaries. Some models, like Flux.1.1[Pro], appear more willing to generate close approximations, while others take more creative liberties to avoid potential legal issues. OpenAI's 4o model begins to generate an accurate rendition and then stops mid-generation due to copyright concerns. Adobe Firefly 4 takes a different approach entirely: when prompted for Mickey, it generates an unrelated image of a woman with sunglasses and a face mask, showing that copyright protection is implemented at a technical level. Recraft similarly implements copyright protections. Google's Imagen 3 (through Gemini 2.5 Pro), by contrast, is willing to generate an evil, fanged version of Mickey Mouse with the distinctive silhouette and round ears, although in a stylized rather than photoreal style. That is a more permissive approach to fictional characters than some competitors take.

Consistency vs. Creativity

HiDream-I1 demonstrates remarkable consistency across all prompts, while models like ComfyUI with FLUX sometimes show more artistic interpretation. Adobe Firefly 4 and Recraft both show excellent technical consistency for architectural and abstract prompts while deliberately sidestepping copyright concerns with creative substitutions for celebrity and character prompts. Recraft's vector output capabilities make it particularly notable for designers who need scalable assets. Google's Imagen 3 shows a fascinating inconsistency: it will generate a stylized but recognizable Mickey Mouse, yet completely fails to produce a realistic Lionel Messi, which suggests different thresholds for fictional characters and real people. Each model maker has to balance predictable results, creative possibilities, practical applications, and legal considerations.

Local vs. Cloud Generation

It's remarkable that locally run models like ComfyUI with FLUX and Hunyuan now compete effectively with cloud-based services like MidJourney, OpenAI DALL-E, and Adobe Firefly. This democratization of AI image generation technology allows for greater privacy, control, and customization.

Video Generation

The inclusion of Hunyuan Video Model shows how prompt-based generation is expanding beyond static images into motion. Given the same five prompts, it adds subtle animation, which opens a new dimension of creative possibilities.

Conclusion

This comparison illustrates not only the technical capabilities of different AI image generation models but also the rapid pace of innovation in this field. From established platforms like MidJourney and OpenAI to newer entrants like HiDream-I1 and locally run solutions, each model brings its own strengths and characteristics, which suit different use cases.

For creators looking to choose between these options, this side-by-side comparison provides valuable insights into how each model interprets identical prompts. The choice between models ultimately depends on specific project requirements, whether prioritizing photorealism, artistic style, prompt adherence, or another factor entirely.

As AI image generation technology continues to evolve, we can expect even more impressive capabilities in the future. This table serves as a historical document capturing the evolution of image generation technology over time, while also providing a snapshot of the current state of the art. It demonstrates how quickly this field is advancing, with notable improvements visible even between versions of the same model released just months apart. At the same time, it shows that progress isn't always linear, with some newer models like MidJourney 7 receiving criticism for failing to meet the expectations set by earlier versions.

![Flux.1[pro] Castle](/assets/images/flux-castle.webp)

![Flux.1[pro] Family](/assets/images/flux-family.webp)

![Flux.1[pro] Evil Mickey](/assets/images/flux-mickey.webp)

![Flux.1[pro] Messi](/assets/images/flux-messi.webp)

![Flux.1[pro] Robot](/assets/images/flux-robot.webp)

![Flux.1.1[Pro] Castle](/assets/images/flux1-1-castle.webp)

![Flux.1.1[Pro] Family](/assets/images/flux1-1-family.webp)

![Flux.1.1[Pro] Evil Mickey](/assets/images/flux1-1-mickey.webp)

![Flux.1.1[Pro] Messi](/assets/images/flux1-1-messi.webp)

![Flux.1.1[Pro] Robot](/assets/images/flux1-1-robot.webp)